A Message from Dr. Kuchera-Morin

Dr. JoAnn Kuchera-Morin, chief designer of the facility, composer, and media artist with over thirty-five years of experience in media systems engineering, outlines the vision for our research.

(Please make sure you extract the files to disk before running)

(If you are experiencing performance issues, or have an older computer, please try the "pre-rendered" audio version)

The AlloLib Software System is the underlying media infrastructure that drives the instrument. It is an integrated collection of C++ libraries for interactive immersive computation and visualization/sonification, together with a reactive system connected to the Python scripting language and Jupyter notebooks. The system provides a complete workflow, from the HPC cluster or the cloud to the desktop. Licensed by the Regents of the University of California, AlloLib is the open source software core for distributed high-performance multimedia computing.

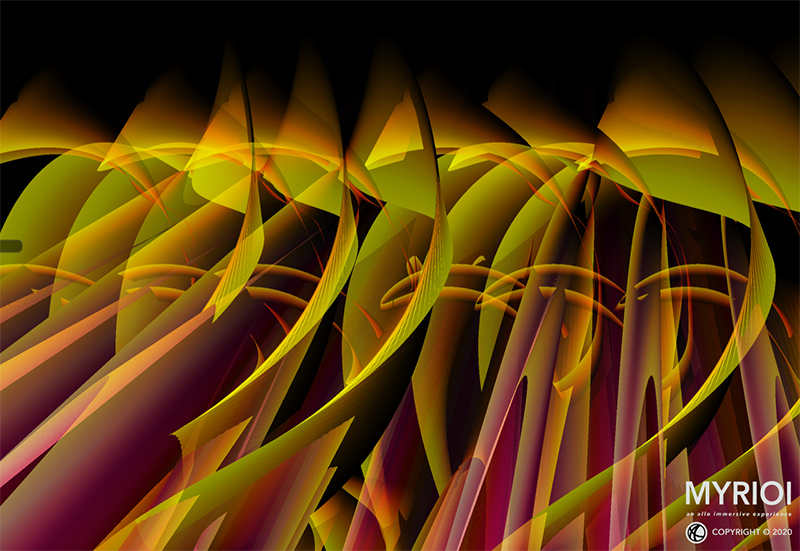

MYRIOI is an interactive, immersive, shared narrative that drives the further evolution of our AlloPortal/AlloLib instrument/environment for artistic/scientific content exploration. The AlloPortal seamlessly ties together full-FOV, unencumbered, projection-based multi-user VR, in which groups of people are immersed in the same world, with HMDs, creating a shared embodied/dis-embodied experience of that world. The world itself is a further evolution of our hydrogen-like atom application. Evolving our quantum mechanical system through the interactive/immersive composition MYRIOI extended AlloPortal to intricate SAR control for a robust multi-user shared experience, and expanded the FOV to encompass the environment.

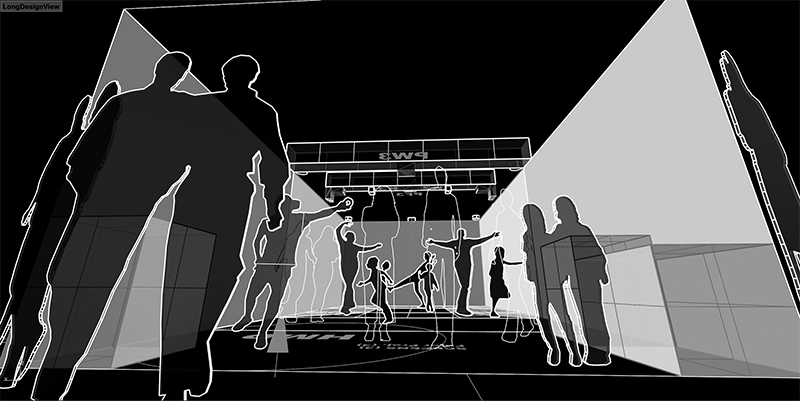

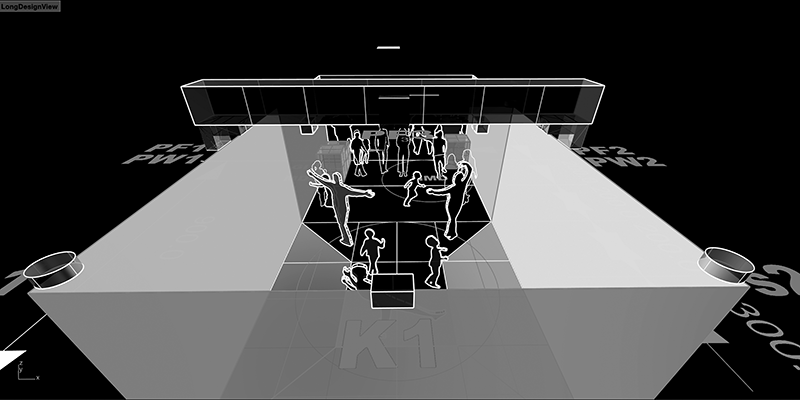

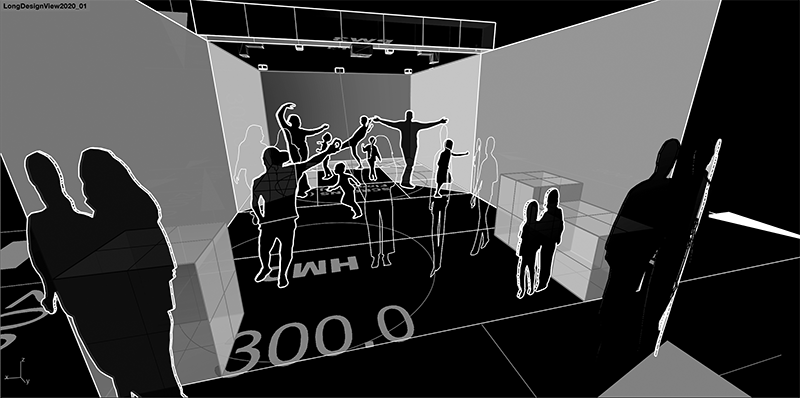

What would it be like to share a VR experience, to really feel present together in unimagined immersive worlds, from the atomic to the cosmic? AlloPortal is an immersive instrument/installation built on our research in designing the AlloSphere instrument and the AlloLib software. We implement various versions of the AlloSphere instrument depending on the installation environment provided, and demonstrate our narrative MYRIOI (“innumerable,” “myriad particles”): to share the experience of being immersed in, and interacting with, myriads of particles that create currents and become waveforms, so as to understand and viscerally experience the quantum together. This shared experience will allow a group of users to see themselves and each other, and to passively experience or actively interact with the world of the quantum: waveforms, light, the pure essence of form and shape. The distributed projection system also connects to an HMD for a dis-embodied shared experience of the same narrative. The HMD will be the intersection of the flow of dynamic form and dynamically moving virtual sculpture with the fabrication of the prominent theme in material form.

AlloPortal will be constructed for this immersive, shared experience, and MYRIOI will extend the SAR construction of the installation to include robust tracking for multi-user manipulation of the narrative. The FOV will be expanded for full peripheral immersion, which is essential for “feeling present” in the immersive world: it must be wide enough to convince a group that they are experiencing, and are “in,” the same world. The dis-embodied experience can be viewed by several people if more than one HMD is connected to the distributed system, and it will let the HMD user explore each form and shape in intricate detail, from a very different vantage point than that of the group experiencing the projection-based narrative. We present our studies in composing elementary wavefunctions of a hydrogen-like atom and identify several relationships between physical phenomena and musical composition that helped guide the process. The hydrogen-like atom accurately describes some of the fundamental quantum mechanical phenomena of nature and supplies the composer with a set of well-defined mathematical constraints that can create a wide variety of complex spatiotemporal patterns. We explore the visual appearance of time-dependent combinations of two and three eigenfunctions of an electron with spin in a hydrogen-like atom, highlighting the resulting symmetries and symmetry changes.
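Why such combinations change over time can be summarized with the standard textbook result for a superposition of hydrogen-like eigenstates. This is a general sketch in the nonrelativistic approximation, ignoring spin-orbit coupling and fine structure, and it is not necessarily the exact formulation used in MYRIOI; the weights $c_1, c_2$ are arbitrary illustrative coefficients.

$$
\psi_{nlm}(r,\theta,\phi) = R_{nl}(r)\,Y_l^m(\theta,\phi), \qquad E_n \approx -\frac{13.6\ \text{eV}\; Z^2}{n^2}
$$

$$
\Psi(\mathbf{r},t) = c_1\,\psi_1(\mathbf{r})\,e^{-iE_1 t/\hbar} + c_2\,\psi_2(\mathbf{r})\,e^{-iE_2 t/\hbar}
$$

$$
|\Psi(\mathbf{r},t)|^2 = |c_1|^2|\psi_1|^2 + |c_2|^2|\psi_2|^2 + 2\,\mathrm{Re}\!\left[c_1^{*} c_2\,\psi_1^{*}\psi_2\,e^{-i\omega_{21} t}\right], \qquad \omega_{21} = \frac{E_2 - E_1}{\hbar}
$$

Only the cross term depends on time, so the probability density pulses at the beat frequency $\omega_{21}$; if $E_1 = E_2$ (two states with the same $n$), the pattern is static. With three eigenfunctions there are three cross terms, oscillating at $\omega_{21}$, $\omega_{31}$ and $\omega_{32}$. Because $E_n \propto 1/n^2$, these frequencies stand in rational ratios, so the combined pattern is periodic. If two states carry opposite spin projections, their cross term vanishes from the total density, since the spin states are orthogonal.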

MYRIOI will take these wavefunction combinations to the highest level of counterpoint: myriads of particles forming waves of light, interactively and immersively visualized and experienced by a shared community who are present and active in a world they could only experience in an instrument/environment built solely for unencumbered group-user experience in a virtual world.

Virtual Reality Interactive Installation including an HMD Version for SIGGRAPH 2020

2019-2020

Many thanks to the members of the AlloSphere Research Group for the design and development of the AlloLib software.

This research was possible thanks to the support of Robert W. Deutsch Foundation, The Mosher Foundation, the National Science Foundation under Grant numbers 0821858, 0855279, and 1047678, and the U.S. Army Research Laboratory under MURI Grant number W911NF-09-1-0553.

immersive multi-modal installation, interactive immersive, multimedia art work, immersive multi-modal multimedia system installation

The AlloLib software system is open source software designed by the AlloSphere Research Group and licensed by the Regents of the University of California on GitHub (https://github.com/AlloSphere-Research-Group/allolib).

The current AlloLib libraries provide a toolkit for writing cross-platform audio/visual applications in C++, with tools that help synchronize sound with the graphics rendering required for distributed performance and interactive control, as well as a rich set of audio spatializers. The AudioScene infrastructure allows vector-based amplitude panning (VBAP), distance-based amplitude panning (DBAP), or higher-order ambisonics to be used as the backend diffusion technique (McGee 2016).

The Gamma library provides a set of C++ unit-generator classes with a focus on the design of audiovisual systems (Putnam 2014). Gamma allows generators and processors to be synchronized to different “domains,” so that they can run at frame rate or audio rate in different contexts. There is therefore no need to downsample signals for the visuals: the signals and processes can run at video rate within the graphics functions. The Gamma application programming interfaces (APIs) are also consistent with the rest of the graphics software, which makes the systems simpler to learn and integrate.

AlloLib employs generic spatializers and panners designed around the concept of scenes with sources and listeners. Just as a 3-D graphics scene can be rendered through different “cameras” with different perspectives, an audio scene can be heard by multiple “listeners,” each using a different spatialization technique and perspective to render the sound. The AlloLib library, which is designed to support distributed graphics rendering, can also be readily used for distributed audio rendering, taking advantage of the rendering cluster’s computing power for computationally intensive audio processing. This is potentially useful for the sonification of extremely large data sets, where the synthesis of each sound agent can be performed in parallel on separate machines, allowing rendering that is both more complex and more nuanced. The system is completely multi-user and interactive.

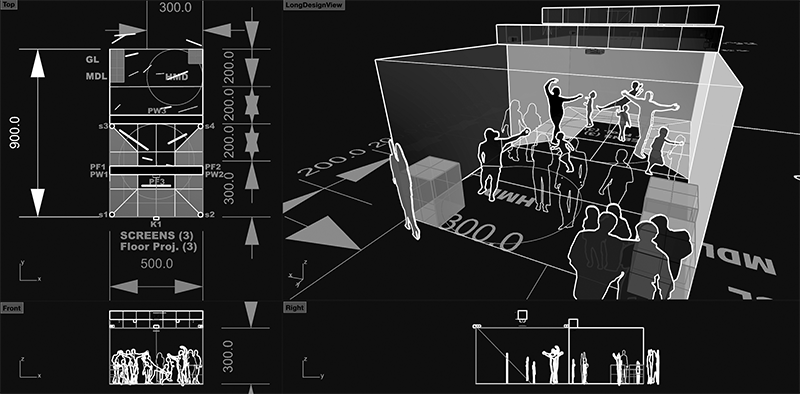

MYRIOI Installation Technical Equipment & Space Requirements (Abbreviated)